Jake Austin (jake-austin@berkeley.edu), Lucas Armand (lucasarmand@berkeley.edu), Ellie Hubach (elliehubach@berkeley.edu)

Neural Radiance Fields (NeRFs) are a powerful way to represent 3D scenes, but they capture specular transparent surfaces such as panes of glass inaccurately, and these surfaces are common in real-world data. For a point in a NeRF to emit, it must also occlude, which makes it difficult to model glass that may show bright specularities yet transmits light from most angles. We propose a small change, applicable on top of any existing NeRF method, that improves performance on these specular transparent surfaces by decoupling emission and absorption.

While Neural Radiance Fields have emerged in recent years for their high-quality reconstructions, they have some fundamental flaws. Many of these flaws arise because, with notable exceptions such as RawNeRF, we volume render 3-dimensional pixel colors (taken from images that have been individually pre-processed and had their color values clipped) rather than raw photon counts in the range [0, inf). In other words, while NeRFs provide a nice approximation of light transport, they are not physically accurate, due in part to using post-processed RGB images where color exists only in [0, 1].

This largely doesn't impact the quality of NeRFs. For specular transparencies such as panes of glass, which can be common in the real world, the NeRF is forced to do something suboptimal: it wastes network capacity learning "active camouflage" so that the glass appears transparent from angles away from the specular reflection. This happens because if the glass wants to emit, it must be dense, which means it must also occlude incoming light. To address this, we propose learning an additional absorption field (which can simply be an additional output logit from the MLP alongside density) that is included in an updated volume rendering equation.

Our solution starts from the original NeRF volume rendering equation.

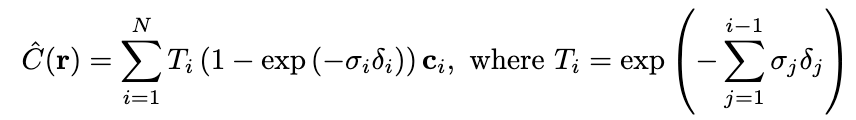

Below is the NeRF volume rendering equation:

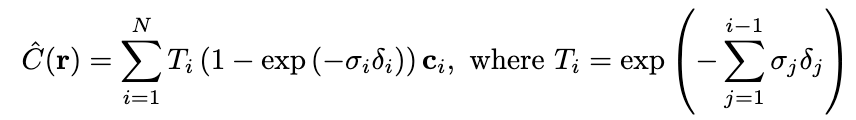

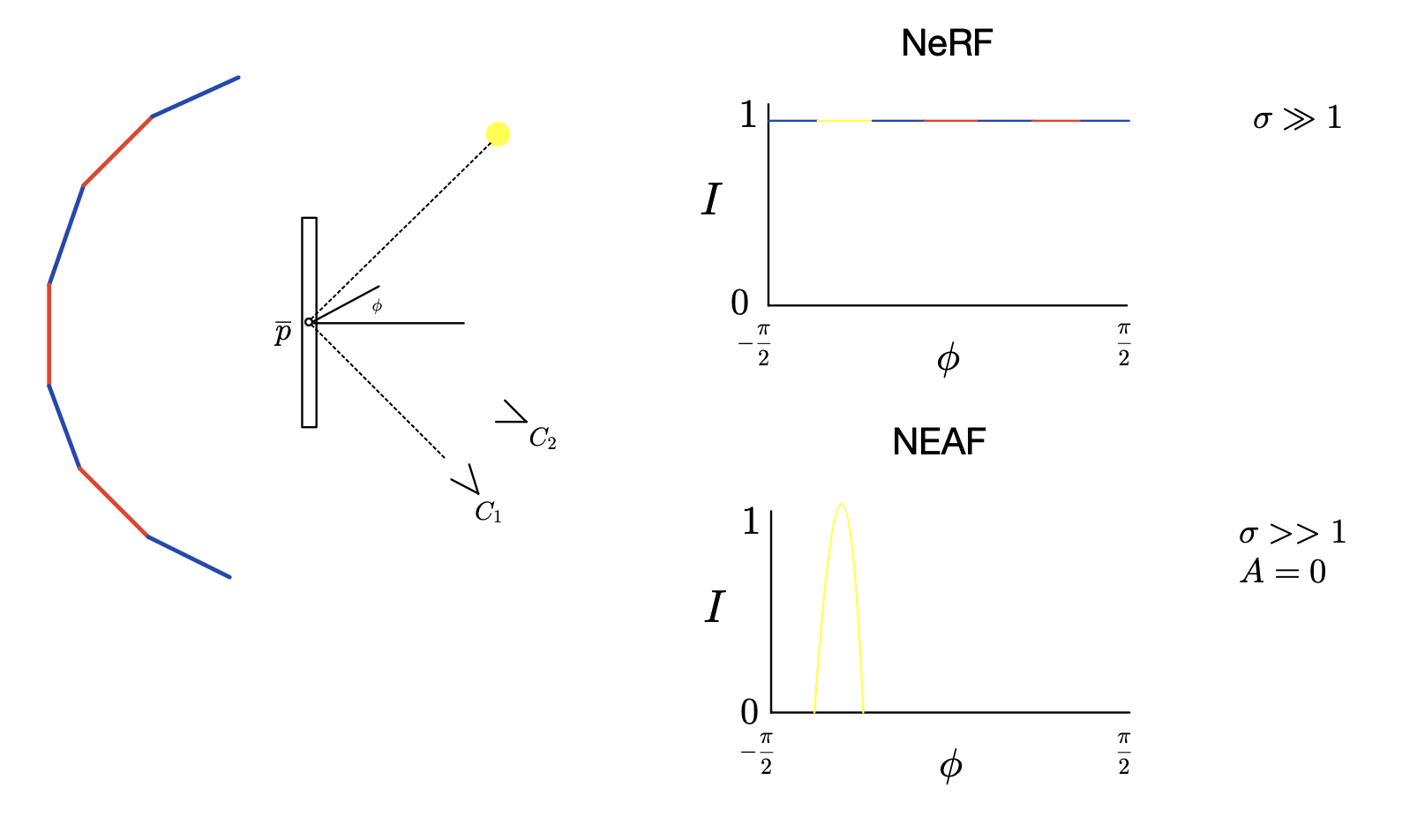

What we dislike about this equation is that the transmittance calculation T_i depends entirely on density, the same quantity that determines how much color a point can emit. We propose adding another field alongside density and color: A(x, y, z), a position-dependent field with values in [0, 1] that modifies the density value in the transmittance calculation.

Below is our new volume rendering equation.

With this simple addition, a point can have both the density that lets it contribute color and the transparency that lets color pass through it, even though the point has density.
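The effect on the compositing weights can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the nerfacto-based implementation: the function name `neaf_weights` and its arguments are hypothetical, and we assume the absorption value multiplies density only inside the transmittance sum, while the emission term keeps the unmodified density.

```python
import numpy as np

def neaf_weights(sigma, spacing, absorption):
    """Per-sample compositing weights along one ray (illustrative sketch).

    sigma:      (N,) non-negative densities at the samples
    spacing:    (N,) distances between adjacent samples
    absorption: (N,) values in [0, 1]; assumed to scale density
                only inside the transmittance term
    """
    sigma = np.asarray(sigma, dtype=np.float64)
    spacing = np.asarray(spacing, dtype=np.float64)
    absorption = np.asarray(absorption, dtype=np.float64)

    # Optical depth contributed by each sample, scaled by absorption.
    tau = absorption * sigma * spacing
    # Transmittance at sample i uses only the samples before it.
    accumulated = np.concatenate([[0.0], np.cumsum(tau)[:-1]])
    transmittance = np.exp(-accumulated)
    # The emission term still uses the full, unscaled density.
    alpha = 1.0 - np.exp(-sigma * spacing)
    return transmittance * alpha
```

With `absorption` set to all ones, this reduces to the standard NeRF weights. With `absorption` set to zero, every sample sees full transmittance, so a dense point can add color without hiding what is behind it.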

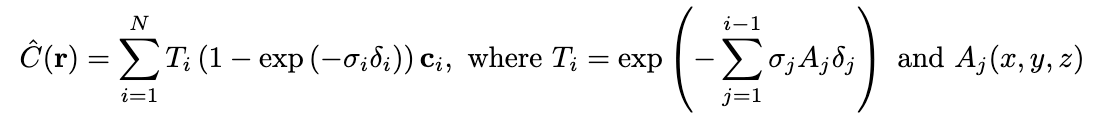

Below is an example case where we have a pane of glass sitting in front of a high frequency background, with one source of light off to the side that can be observed on the glass at point p from the perspective of camera C1.

In this example, let's imagine we are modeling the scene with a NeRF. If we want point p to emit some light and contribute to the rendered color (since it does have a specularity), it must have a lot of density to override the color behind it. If point p has density, then it also necessarily occludes. But what about when we view point p from different angles? Because point p occludes light, it must learn a view-dependent color that mimics the background, even if the background is high frequency and difficult to model, which wastes valuable network capacity.

Let's now imagine training a NEAF. The glass still has density, same as the NeRF, except now we can easily learn absorption A(p) == 0. With this, point p can still contribute color, adding to the existing color to mimic a specularity, while not occluding at all. Furthermore, the function that the RGB field learns is simply an impulse of yellow light at the specular angle, with zero color elsewhere, a far easier function for the neural network to learn, enabling it to use that saved network capacity elsewhere.

The problems we encountered putting NEAF on top of nerfacto were mainly numerical imprecision (nerfacto uses fp16) and exploding gradients, which required a small regularizer to keep the weights we calculate for compositing the sampled colors along the ray from exceeding 1. Standard NeRF has the mathematical guarantee that the calculated weights (w_i = T_i (1 - exp(-sigma_i * gamma_i))) for the color samples along a ray sum to less than or equal to 1. We lose this guarantee, so a very small regularizer that penalizes the NEAF for having weights that sum to more than 1 is required.
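One possible form for such a regularizer is a hinge penalty on the per-ray weight sum. The sketch below is only an illustration: the squared-hinge form, the function name `weight_sum_penalty`, and the default coefficient are all assumptions, since the exact penalty is not specified here.

```python
import numpy as np

def weight_sum_penalty(weights, coefficient=1e-3):
    """Penalize rays whose compositing weights sum to more than 1.

    weights:     (num_rays, num_samples) compositing weights
    coefficient: hypothetical small scale factor for the penalty
    """
    weights = np.asarray(weights, dtype=np.float64)
    # Amount by which each ray's weight sum exceeds 1 (zero otherwise).
    excess = np.maximum(weights.sum(axis=-1) - 1.0, 0.0)
    # Assumed squared-hinge form, averaged over rays.
    return coefficient * np.mean(excess ** 2)
```

Rays whose weights already sum to 1 or less contribute nothing, so the penalty does not affect regions where the NEAF behaves like a standard NeRF.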

COLMAP / HLOC were also obnoxious, since we are explicitly trying to test on scenes with heavy reflections from glass, which makes it particularly difficult for COLMAP / HLOC to localize frames. For a number of scenes that we wanted to test on, heavy tuning of HLOC parameters was needed. Even then, some of the estimated poses were clearly incorrect or inconsistent with other frames upon inspection. This limited our ability to test our method on hand-collected data, which is why we primarily report PSNR/SSIM/LPIPS results with nerfstudio's default data.

Furthermore, we were unable to hit the milestone of implementing NEAF on top of ZipNeRF. We made progress: the proposal sampler loss seemed to work fine, and we identified how to use tinycudann for efficient hash grids. However, ZipNeRF had issues we did not anticipate, such as requiring prohibitively large batch sizes to train (if the appendix is to be believed) and too much VRAM for consumer cards. Our progress can be found here: https://github.com/jake-austin/zipnerf-nerfstudio. The plan is to continue this work intermittently with the help of nerfstudio contributors, to get it merged into nerfstudio as a supported method down the line, hopefully with lower compute requirements.

Finally, with our Instant-NGP NEAF implementation, there were difficulties surrounding environments and requirements. We had the misfortune of discovering that nerfstudio had bumped the required versions of libraries like nerfacc after Lucas had installed his environment. This made the nerfstudio main branch's instant-ngp code (which uses nerfacc directly, and which we were starting our NEAF instant-ngp implementation from) incompatible with our installed environments. This was a problem because, to create our NEAFs, we started from the fields / models implemented on the nerfstudio GitHub.

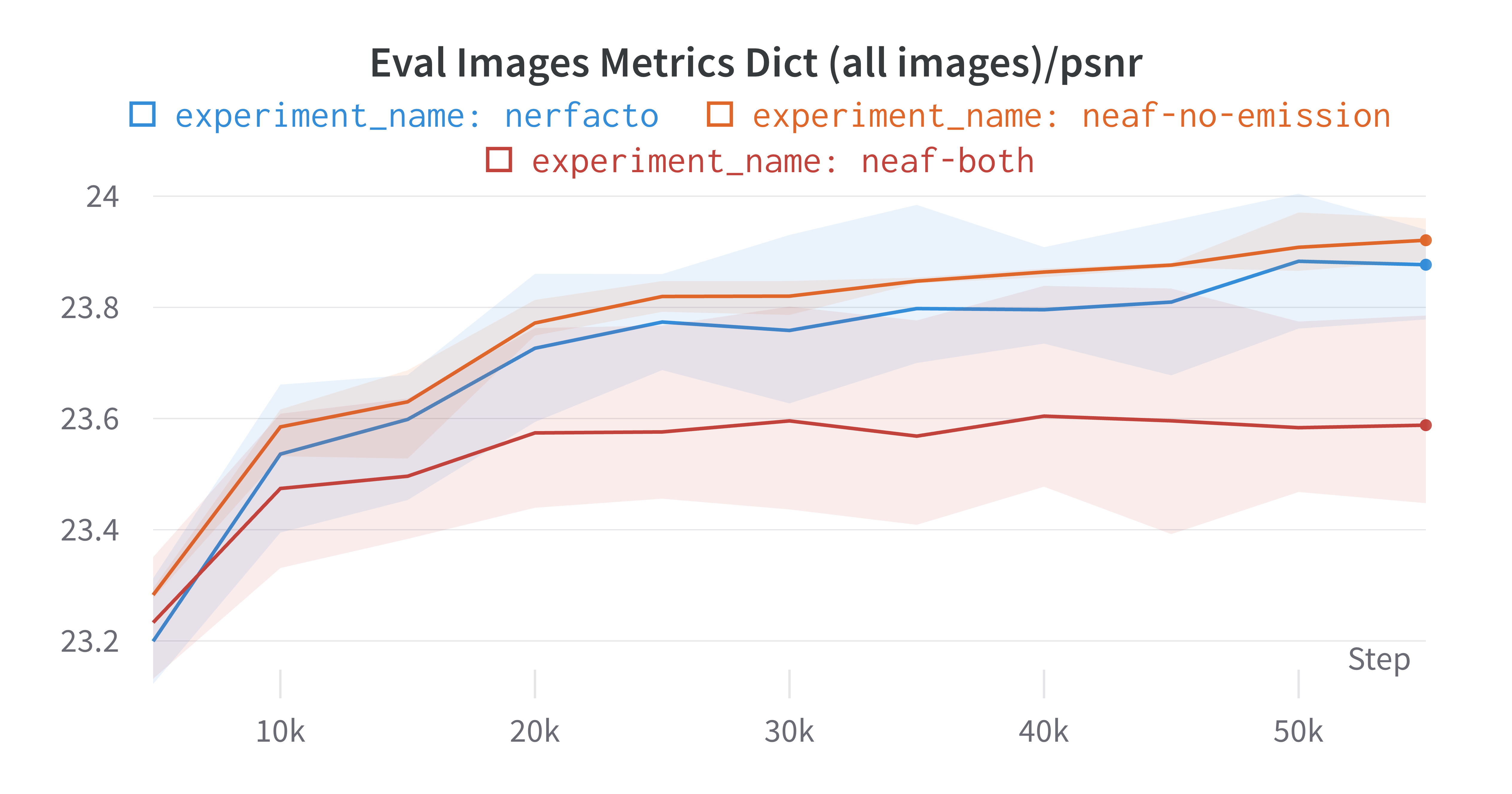

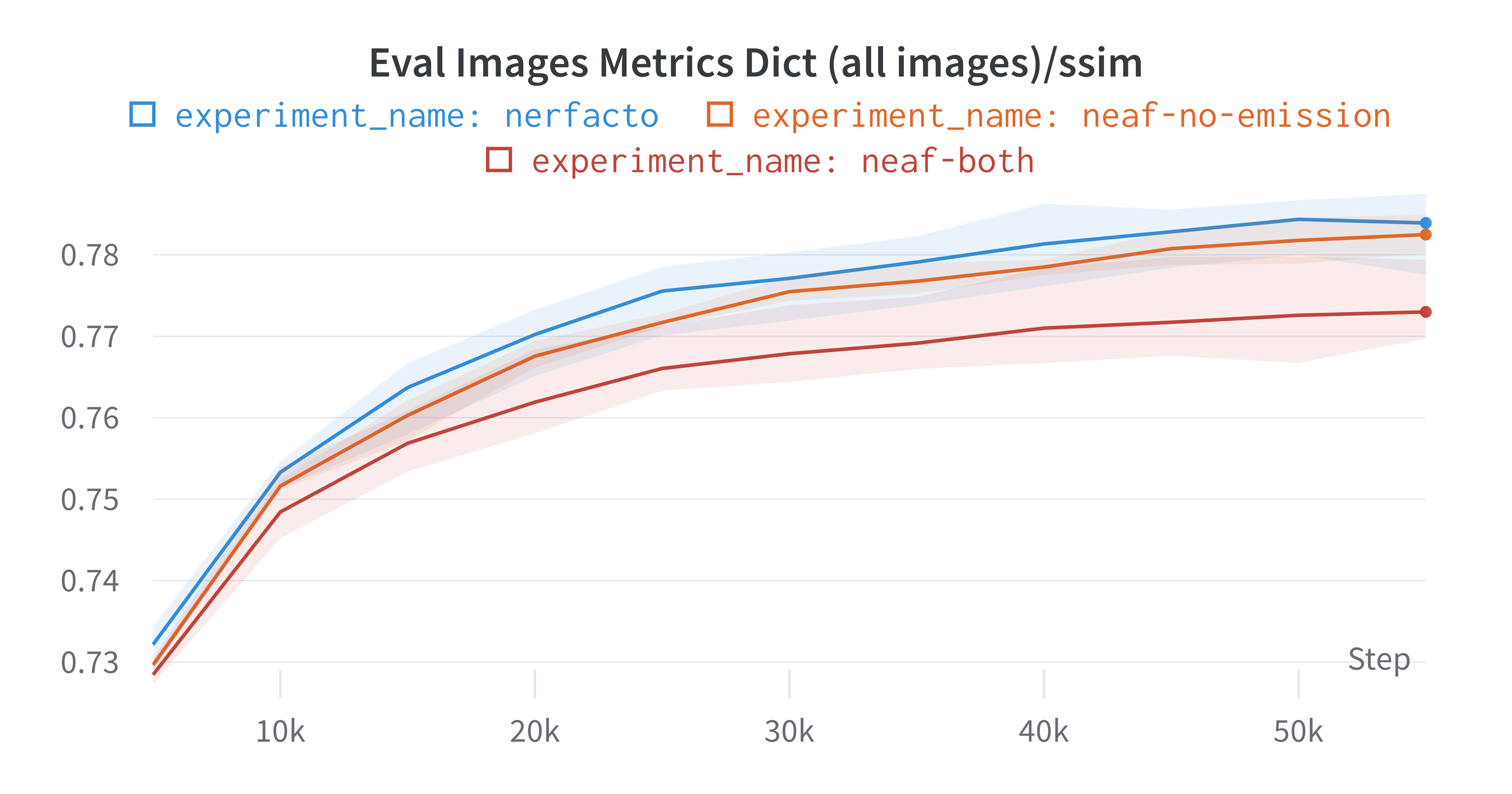

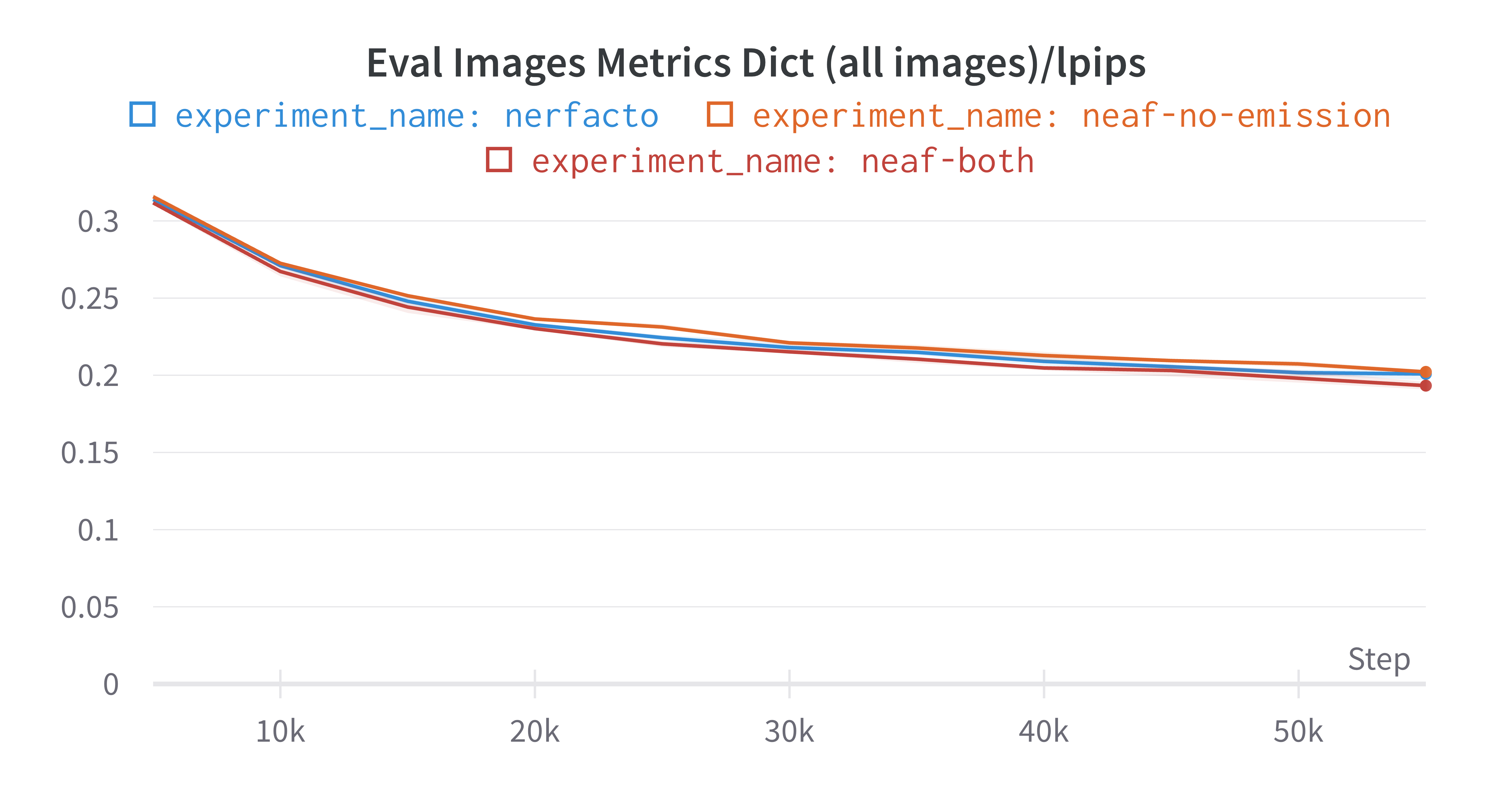

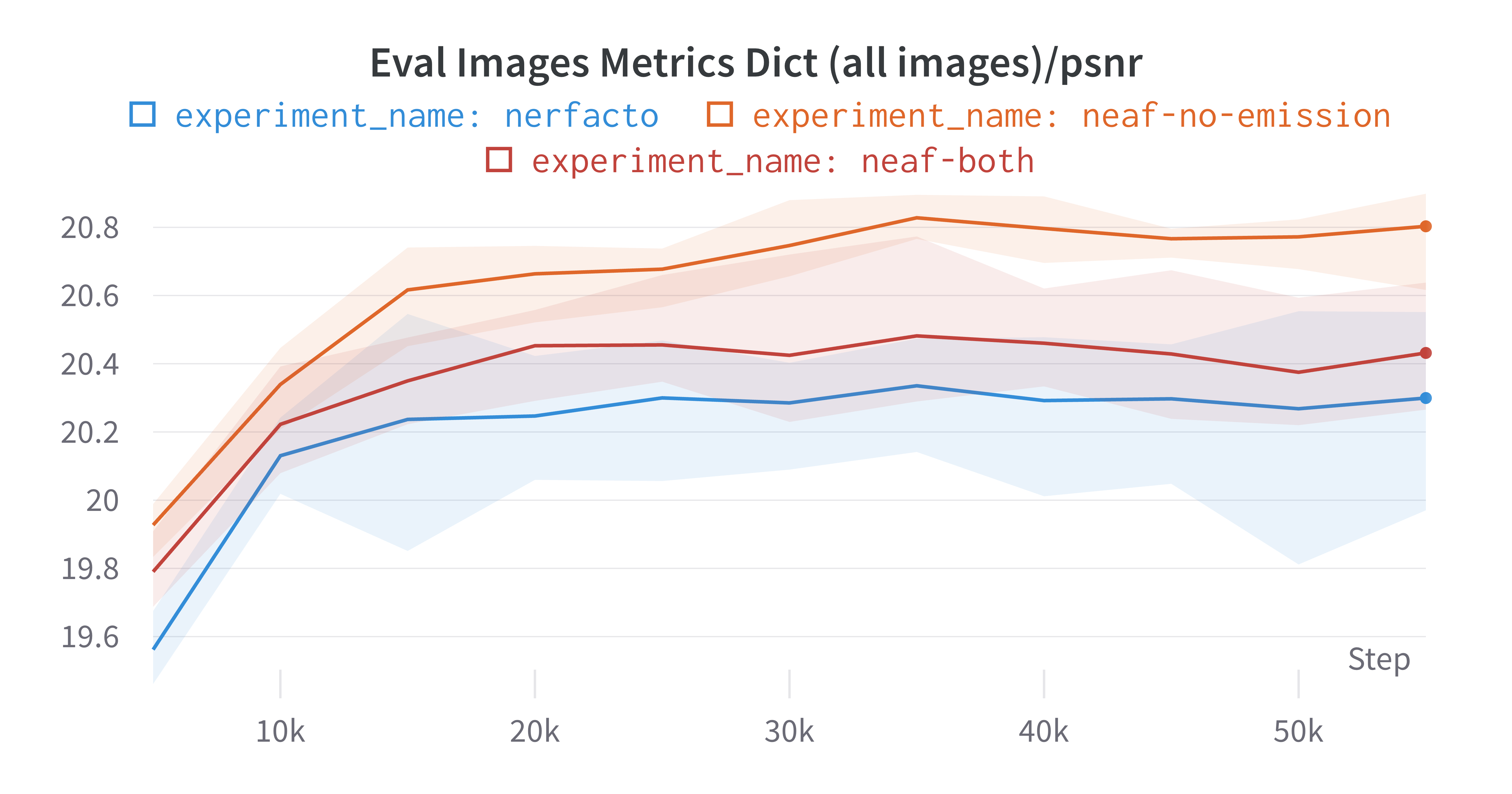

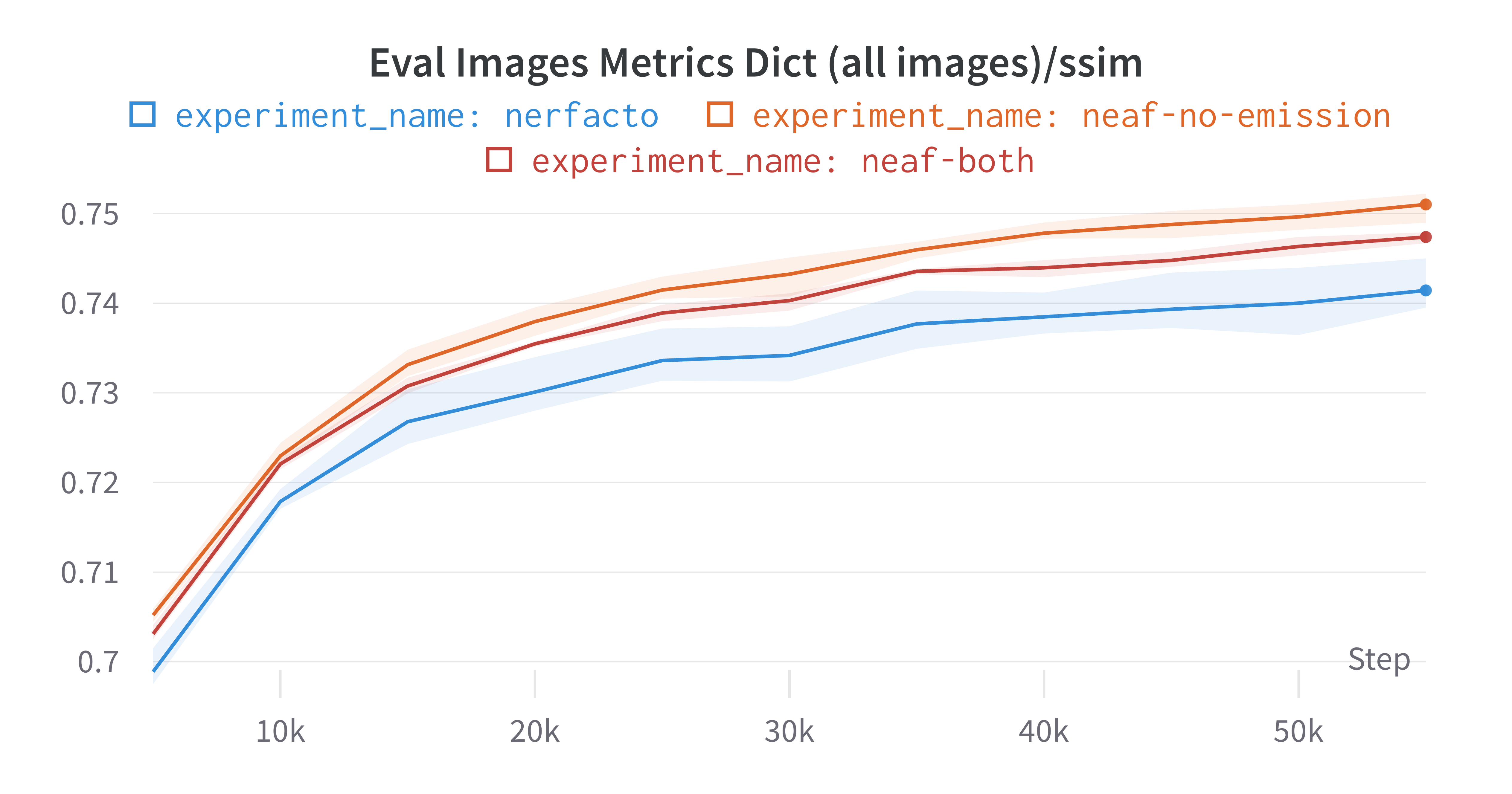

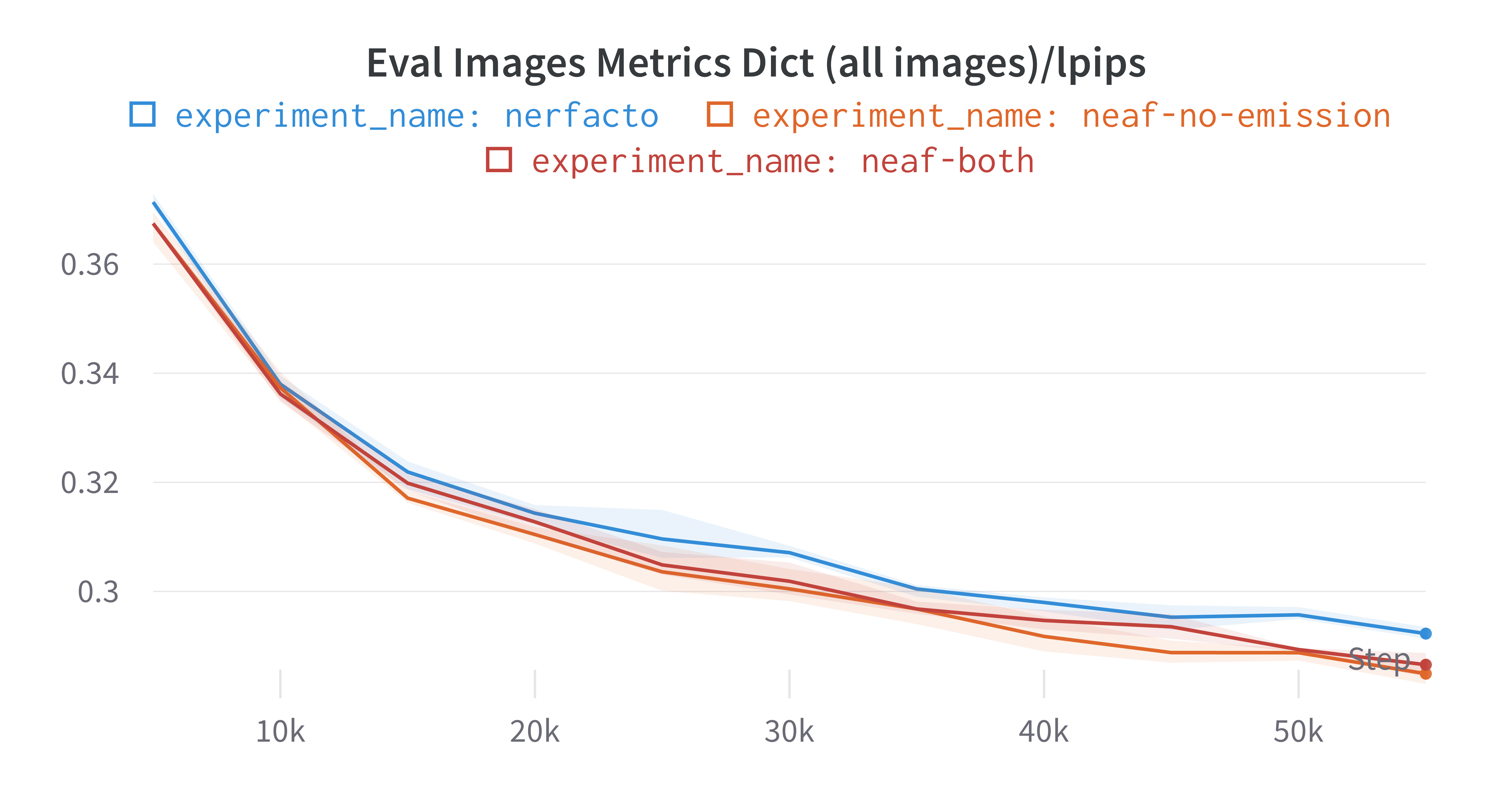

We found that on both scenes, NEAF matches or improves on the original nerfacto on all metrics, with as much as 5% better PSNR, SSIM, and LPIPS out of the box, without tuning any hyperparameters. The one caveat is that we raise the number of training rays from the default 4k to 8k for both our nerfacto benchmark and the NEAF derived from nerfacto. We run 3 seeds and plot the mean and the min/max seeds for each method.

Below are our results. Nerfacto is the default nerfacto method, neaf-no-emission is the standard NEAF method we are shipping on top of nerfacto, and neaf-both is an ablation where we include an emission coefficient that modifies the emissive capability of density in the same way we modify the absorption capability of density.

Berkeley Way West Entrance:

Nerfstudio Dozer:

We can further show qualitative results that demonstrate how our method is capable of both brighter specularities and better glass transmittance. The following are images from the final renders of our best NEAF nerfacto run and our best regular nerfacto run.

NEAF:

Nerfacto:

You can clearly see that our NEAF method gets better color transmittance of the wheels of the cars in the background, while also getting brighter specularities off the back window of the dozer, something that would require the back window to be 100% occluding in NeRF.

We can also visualize the absorption field using the same volume rendering weights that we use for the color.

You can clearly see that in areas where parallax matters (i.e., where we have many angles on a point in space in our training data, and the color isn't uniform), we have high absorption in general, except on the windows of the dozer, which have low absorption.
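Rendering the absorption field this way amounts to a weighted sum of the per-sample absorption values along each ray. A minimal sketch, assuming per-ray arrays of weights and absorption values (the function name `render_absorption` is hypothetical):

```python
import numpy as np

def render_absorption(weights, absorption):
    """Composite per-sample absorption into one value per ray.

    weights:    (num_rays, num_samples) color compositing weights
    absorption: (num_rays, num_samples) absorption values in [0, 1]
    """
    weights = np.asarray(weights, dtype=np.float64)
    absorption = np.asarray(absorption, dtype=np.float64)
    # Same weighted sum used for color, applied to absorption instead.
    return np.sum(weights * absorption, axis=-1)
```

Because the weights along a ray are not guaranteed to sum to exactly 1, the result is a composited value rather than a normalized average.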

In the graphs above, we included neaf-both, an ablation in which we learn not only an absorption coefficient but also an emission coefficient. Density is used twice in the volume rendering equation: once for determining occlusion/transmittance, and once for determining the amount of color added at a given point in space. We ablated whether it would be useful to go further and add another coefficient in [0, 1] that scales the emission at a given point. This simply multiplies the density term that *isn't* in the calculation of T_i.

Quantitatively, you can see worse PSNR/SSIM/LPIPS compared to the NEAF with no emission coefficient. This was unlikely to work well, since it overparameterizes the scene, with more parameters in the volume rendering equation than there are degrees of freedom. If you lock emission at 0.5 for the whole scene, density can learn to be twice as big to account for that, and absorption half as big to account for the change in density. This redundancy in the number of ways the model can express the same thing leads to more overfitting.
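The redundancy can be checked numerically. The sketch below assumes the emission coefficient multiplies density only in the emission term and absorption multiplies it only in the transmittance term. The function name `neaf_both_weights` and the sample values are hypothetical, chosen only for illustration.

```python
import numpy as np

def neaf_both_weights(sigma, spacing, absorption, emission):
    """Compositing weights with separate absorption and emission scales."""
    # Absorption-scaled optical depth accumulated before each sample.
    tau = absorption * sigma * spacing
    accumulated = np.concatenate([[0.0], np.cumsum(tau)[:-1]])
    # Emission-scaled density determines how much color is added.
    alpha = 1.0 - np.exp(-emission * sigma * spacing)
    return np.exp(-accumulated) * alpha

sigma = np.array([0.3, 4.0, 1.5])
spacing = np.array([0.1, 0.1, 0.1])
absorption = np.array([1.0, 0.2, 0.8])

# Parameterization A: emission fixed at 1.
w_a = neaf_both_weights(sigma, spacing, absorption, np.ones(3))
# Parameterization B: emission 0.5, density doubled, absorption halved.
w_b = neaf_both_weights(2.0 * sigma, spacing, absorption / 2.0, np.full(3, 0.5))

# Both parameterizations yield identical weights, hence identical renders.
assert np.allclose(w_a, w_b)
```

Two different sets of field values produce exactly the same weights, so the training images cannot distinguish between them.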

[1] NeRF: https://arxiv.org/abs/2003.08934

[2] Instant-NGP: https://nvlabs.github.io/instant-ngp/assets/mueller2022instant.pdf

[3] ZipNeRF: https://arxiv.org/abs/2304.06706

[4] Nerfstudio: https://github.com/nerfstudio-project/nerfstudio

Work was split up well. Jake and Lucas had access to more compute, tackling the nerfacto and instant-ngp implementations respectively. Jake tried and failed to make the zipnerf implementation in time. Lucas collected data and generated the NeRFs for the room and sliding glass door. Ellie worked on data collection / preprocessing and infra.